
How tech is transforming surgery

Photo credit: Colin Sneyd

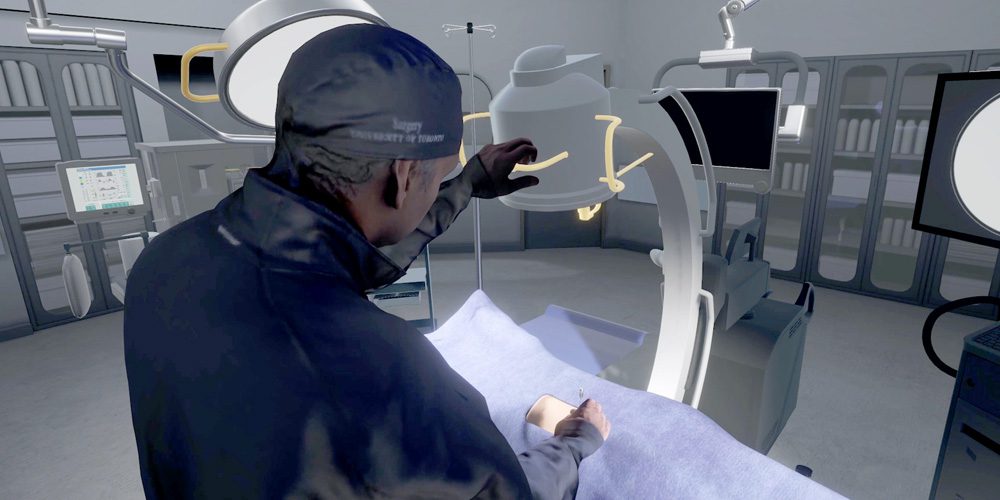

Recently, I stood over a patient in an operating room and, for the first time in my life, began to remove a kidney stone. The spacious OR was surprisingly quiet and, except for the patient — motionless and prone on the table — I was alone.

I held a surgical needle in my right hand, and with my left, manipulated a C-arm, whose fluoroscopic imaging allowed me to see exactly where the stone was located. The tools were unfamiliar and my hand far from steady, but after a few minutes, I gingerly inserted the tip of the needle into the patient's lower back. It took a bit of effort. I could feel the firmness of the flesh as the metal slowly pierced it. I could hear the surprising sound of the puncture, too: a slow, slightly sickening squelch.

Once the needle was in, I gave it a wiggle, trying to get as close as possible to the stone. To determine how deep I’d gone, I grabbed another tool, a rectangular magnifying glass bound by a gold frame. Holding that over the insertion point, I saw that I’d pushed the needle in too far — it had gone right through the stone. Oops.

Such a magnifying device doesn't exist in your average operating room. But then again, this entire operating room doesn't actually exist in real life, either. Everything I could see, from the patient to the surgical needle, was part of a virtual reality simulator created by the startup Marion Surgical. I was standing in neither a hospital nor a med school, but in the Oakville kitchen of Marion's co-founder and CEO, Ben Sainsbury. On the kitchen table lay the simulator itself: basically, a desktop PC and a portable PCNL (percutaneous nephrolithotomy) trainer.

The trainer is about two feet high, made of heavy white plastic and steel. Its most distinctive feature is a wand that resembles a dentist's drill, which the user grips to "hold" the virtual surgical needle. This wand is what sets Marion's machine apart from other simulators: it provides the user with haptic feedback, an approximation of the tactile sensations you might experience in real life. With Marion's system, I could feel the heaviness of the surgical tool and the resistance of the patient's flesh. Simulating this, Sainsbury argues, is key to improving users' accuracy. To enter the 3D immersive environment of the simulation, all I had to do was don an Oculus VR headset, have Sainsbury and design engineer Mihail Filipov configure a few settings, and place my hand on the wand. The whole kit is compact enough to fit in the overhead compartment of an airplane cabin. But its possibilities, from an educational perspective, are enormous.

Sainsbury and his team, which includes co-founder Rajiv Singal, chief of surgery at Toronto's Michael Garron Hospital, began developing their simulator in 2016. Sainsbury had a background in film and gaming and was pursuing his PhD at the University of Ontario Institute of Technology. Singal is a urologic surgeon and had implemented the da Vinci robotic surgical program at Michael Garron. When Singal watched Sainsbury demonstrate an early iteration of the system at a grad exhibition, he immediately saw the application for his field, and they went into business together. While simulation trainers were already being used in heart and brain surgery, there was nothing for urologic surgery, a relatively niche market but one whose procedures can be just as tricky. Sainsbury and Singal envisioned a future where students could learn techniques without the costs and risks of, say, using actual surgical facilities or working on cadavers; practising surgeons could hone or upgrade their skills; and, best of all, doctors could be trained remotely by clinicians anywhere in the world.

In the past, simulations were built from data gleaned from actual patients and then used for research purposes or after-the-fact analysis. Now that computing power and connection speeds have improved dramatically, simulations can be extraordinarily sophisticated: virtual organs can move, "breathe," and respond to virtual surgical instruments in real time. "It went from this theoretical, kind of PhD work," Filipov says, "to something that you can have in a classroom and use for training." While Marion has concentrated on urology, companies like Vancouver's PrecisionOS and OssoVR, based in B.C. and California, are focusing on orthopedic and other kinds of surgery. Medical simulation is now a key component of many surgical trainees' residency programs, and the American College of Surgeons has made simulation a prerequisite for board certification in the United States.

After my first somewhat botched surgical attempt, Sainsbury booted up his latest simulation. This one featured an avatar of Singal, wearing surgical scrubs, and a female anesthesiologist in another corner of the OR. The graphics were a bit better, the textures smoother. The idea, Sainsbury said, is a "multiplayer" module that would let a trainee become familiar with the choreography of a particular surgery. No one, in fact, would need to learn on the job: the entire surgical team could rehearse in advance from the comfort of their own computers. I was about to mention how quiet this OR was, too, but Sainsbury was a step ahead of me. He said they were planning to build a Spotify player into the system. "Most of the surgeons I know listen to music," he says. "It'll be a full DJ party in there."

Augmenting a surgeon’s reality

In the six years since Marion was founded, VR has become an increasingly mainstream, even commonplace, technology in healthcare. It's now used in everything from aversion therapy to improving the quality of life of dementia patients. Once the pandemic made remote care, or telemedicine, more necessary and more acceptable (by the end of the first wave, in June 2020, half of all Canadians had received some form of virtual care), the potential for VR to make telemedicine more interactive, more satisfying and, most importantly, more diagnostically accurate became even more apparent.

Virtual reality is just one of many futuristic tools revolutionizing healthcare. The metaverse, a still nebulous term for the technologies that are moving the internet beyond screens and into an immersive, 3D experience, has spawned a number of medical devices and processes that were impossible to imagine just a few years ago. While critics of the metaverse have legitimate gripes about overhyped promises (don't get me started on NFTs), the healthcare products that are emerging have real transformational substance. Among Canadian startups alone, there are companies building an application that allows clinicians to view 3D holographic images of their patients' bodies (Cloud DX), special cameras that let diabetic patients monitor their own skin health (Mimosa Diagnostics), and handheld tools that help detect bacterial levels in surgical wounds (MolecuLight).

It's in surgery, though, where some of the most thrilling, cutting-edge work continues to be done. About five years ago, Stefan Hofer, a plastic surgeon at University Health Network in Toronto, was part of the medical team taking care of Peter Munk, the late billionaire entrepreneur and philanthropist. The two men got along well, and at one point, Munk asked Hofer if he had any dream projects that he wanted to research. Hofer, a self-professed technophile, had been thinking about how to use mixed reality (the blending of the digital and the real to create new environments) in surgery. Munk was intrigued and gave Hofer $2 million, which allowed him to hire an engineer, Jimmy Qiu.

The two are now developing an augmented reality system that projects information from clinical scans (like MRIs) in 3D onto live patients during surgery. The aim is a system that colour-codes various structures, such as nerves, blood vessels and the edges of a tumour, and lets a surgeon wearing a headset issue voice commands to highlight particular ones.

"When you operate," Hofer says, "you see what you're looking at. But here, your view is augmented. It's like a Google Map, or the heads-up display in a car." While the as-yet-nameless system is still being refined by Hofer and Qiu, along with Blake Murphy, another plastic surgeon, it can already project images to within one millimetre of their true location, which is precise enough for surgical purposes.

The system will make surgeons better at their jobs, but Hofer is most excited about its potential to help students and residents acquire new skills. "When you teach something now, you don't always know what someone is looking at or whether they see the same thing as you," he says. The team is also developing related tools that allow a surgeon or clinician in another room, or even another country, to annotate the augmented view on their own phone or tablet (telling the operating surgeon to go in a particular direction, say, or to try something here) and relay that information in real time. "It's like science fiction," Hofer says. "Like those movies where you have artificial intelligence in your spaceship that assists you with everything. You just ask and it helps you."

What it can’t help doctors do (at least not yet) is make hospitals actually adopt all this cutting-edge technology. While the federal government’s April budget earmarked a $30-million investment in homegrown medical solutions, historically, Canada’s healthcare system has been notoriously slow to embrace innovation. “We’re way better at developing than adopting,” says Sam Ifergan, the founder of iGan Partners, a venture capital firm that specializes in medical devices and tech. “Because our healthcare system is very bureaucratic, nobody has the budget or gets compensated for taking risks.”

This has been Marion Surgical's experience, too. "In Canada, there's a lot of interest. But there's no money to buy it," Sainsbury says. Accordingly, much of his time has been spent in Europe, an early adopter of VR for training purposes. In late winter, he went on a lengthy tour of the continent, with stops in Greece, Belgium, Paris and England, where the simulator was used in advanced training classes. A metaverse in every Canadian hospital, however, seems light-years away.

Another obstacle to adoption is integrating standalone pieces of tech into existing OR environments in a way that improves the workflow. But for Toronto-based Synaptive Medical, developing a suite of surgical tools has been the plan from the beginning. Its Modus V robotic digital microscope (or exoscope) provides up to 12.5 times optical zoom, and its high-resolution images can be projected on a screen in the OR, so surgeons don't need to tether themselves to a surgical microscope during a procedure. This allows them to work faster and with less physical strain, while the entire surgical team can see exactly what's happening. Even better, the Modus V system features special glasses that let surgeons see the images in 3D, allowing for smaller, less invasive incisions and better surgery (and thus, better recovery).

The exoscope is a remarkable device in its own right, but crucially, it works hand-in-hand with other Synaptive tools: the Synaptive 0.5 MRI, a smaller, lightweight MRI scanner designed for emergency rooms, and Modus Plan, an automated whole-brain tractography system (tractography being the 3D modelling of the brain's white matter fibre tracts). "The idea was to create an environment of products that deliver novel patient information, which flows from one product to the next," says Kyle D'Arcey, the firm's director of product management. "That way we can improve diagnostic and surgical decision-making and make the treatment of that patient that much more efficient." While continuing to focus largely on the brain, the company is now moving into other types of imaging, like ultrasound. "We really want to be a one-stop shop for imaging information in the operating suite for precision care."

The biggest hurdle for this technology, D'Arcey says, is the learning curve that comes with its adoption. The same can be said of the broader metaverse. How do we bring new technology into our lives in a way that makes sense, one that doesn't complicate them but actually improves them? And as we spend more and more time in the virtual plane, how do we best leverage the virtual to make a substantial difference in the real?

My own foray into urologic surgery gave me a tantalizing hint, however brief and clumsy — though Hofer, the plastic surgeon, suspects that clumsiness would be short-lived. “If this takes off,” he says, “you can be pretty skilled and confident before you ever even touch a patient.”


MaRS Discovery District

https://www.marsdd.com/

Based in Toronto, MaRS is the world's largest urban innovation hub, supporting startups in the health, cleantech, fintech and enterprise sectors. When MaRS opened in 2005, this concept of urban innovation was an untested theory. Today, it's reshaping cities around the world. MaRS has been at the forefront of a wave of change that extends from Melbourne to Amsterdam and runs through San Francisco, London, Medellín, Los Angeles, Paris and New York. These global cities are now striving to create what we have in Toronto: a dense innovation district that co-locates universities, startups, corporates and investors. In this increasingly competitive landscape, scale matters more than ever, because the best talent is attracted to the brightest innovation hotspots.